Information Lifecycle Management

Introduction

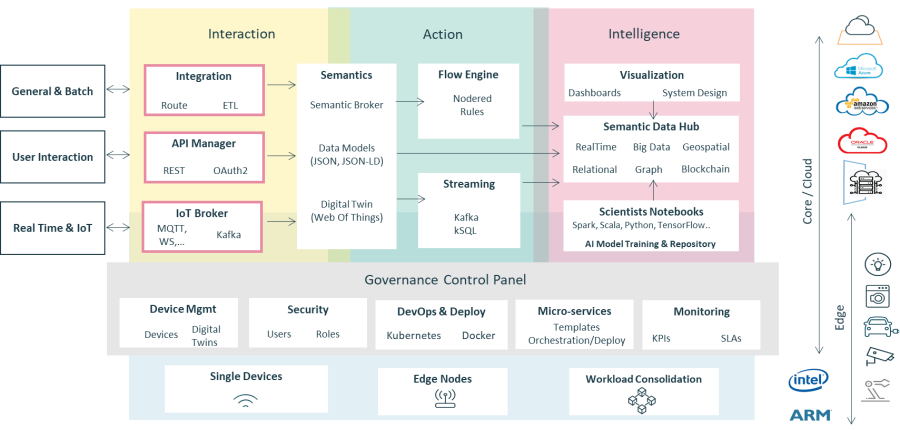

This section describes the high-level characteristics of the Platform organized by logical layers.

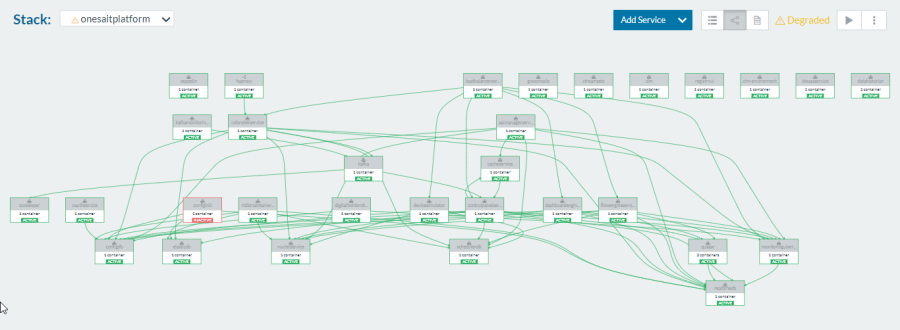

The following diagram explains the logical flow of information in the platform, from the data producers to the information consumers through the Platform.

The solution's information flows navigate through the layers of the Platform (ingestion and processing, storage, analytics and publication), from the data producers to the information consumers, following the paradigm of "listen, analyze, act".

These information consumers and producers will be both existing systems and new vertical components, incorporated into the solution through simple integrations. Because they are based on the platform, they will allow the solution to scale over time in a robust, controlled manner.

The flow of information in the platform is detailed next:

Ingestion and processing

The proposed solution allows the ingestion of information from real-time data sources of virtually any type, from devices to management systems. These real-time information gathering capabilities are called "Real Time Flow". They include the extraction of information from social networks, which allows users to analyze opinions in real time, so that people act as "human sensors".

This real-time information from devices, systems and social networks enters the platform through the most appropriate gateway (multiprotocol interface) for each system. It is then processed, reacting in real time to the configured rules, and is finally persisted in the storage module's Real Time Database (RealTimeDB).

The rest of the information comes from more generic sources ("Batch Flow"). It is obtained through extraction, transformation and loading processes in batch mode (not real time) and enters the solution through the bulk information loading module (ETL).
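The two flows can be sketched as follows. This is a minimal in-memory illustration, not the platform's implementation: the names `Rule`, `IngestionPipeline`, `ingest_realtime` and `ingest_batch` are hypothetical, and the separate `batch_store` is an assumption, since the text does not say which repository receives batch loads.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

Record = dict[str, Any]


@dataclass
class Rule:
    """A real-time rule: run `action` on every record for which `condition` holds."""
    condition: Callable[[Record], bool]
    action: Callable[[Record], None]


@dataclass
class IngestionPipeline:
    rules: list[Rule] = field(default_factory=list)
    realtime_db: list[Record] = field(default_factory=list)  # stand-in for RealTimeDB
    batch_store: list[Record] = field(default_factory=list)  # assumed target of ETL loads

    def ingest_realtime(self, record: Record) -> None:
        """Real Time Flow: react to the configured rules, then persist the record."""
        for rule in self.rules:
            if rule.condition(record):
                rule.action(record)
        self.realtime_db.append(record)

    def ingest_batch(self, rows: list[Record],
                     transform: Callable[[Record], Record]) -> int:
        """Batch Flow: transform the extracted rows and load them in bulk."""
        loaded = [transform(row) for row in rows]
        self.batch_store.extend(loaded)
        return len(loaded)


# Usage with made-up records: one rule collects over-temperature readings.
alerts: list[Record] = []
pipeline = IngestionPipeline(
    rules=[Rule(lambda r: r.get("temperature", 0) > 40, alerts.append)]
)
pipeline.ingest_realtime({"device": "sensor-1", "temperature": 42})
pipeline.ingest_realtime({"device": "sensor-2", "temperature": 21})
loaded = pipeline.ingest_batch(
    [{"name": " Main St "}], lambda row: {"name": row["name"].strip()}
)
```

Only the first reading triggers the rule, while both readings are persisted. The batch rows bypass the rules in this sketch.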

Storage

The information that comes from ingestion and processing is stored in the platform's DataHub.

This semantic layer is supported by a series of repositories exposed to the rest of the layers, which hide their underlying technological infrastructure from the modules that access their information. We call this the Semantic Data Hub.

In this way, the most adequate infrastructure is provided according to each project's requirements (real-time and historical information volumes, predominantly read or write access, a larger number of analytical processes, technologies already in place at the client, etc.). The repositories that make up this module are the following:

- Real Time Data Base (RealTimeDB): this database is designed to support a large volume of insertions and online queries very efficiently. The platform abstracts users from the underlying technology, allowing the use of document databases such as MongoDB, time series databases, relational databases, and others.

- Historical and Analytical Data Base (HistoricalDB): this storage is designed to store all information that is no longer part of the online world (for example, information from previous years that is no longer queried) and to support analytical processes that extract knowledge from this data (algorithms).

- Staging Area: stores raw files that have not yet been processed by the platform, for later ingestion. HDFS is usually used as storage.

- GIS Data Base: the database that stores GIS information. Depending on the case, it can be the same database as RealTimeDB or a separate, dedicated one.
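The idea of repositories that hide their backing technology, together with moving data from RealTimeDB to HistoricalDB once it leaves the "online world", can be sketched as below. Everything here is illustrative: the `Repository` protocol, `InMemoryRepository` and `historicize` are hypothetical names, and the assumption that records carry a `timestamp` field is ours, not the platform's.

```python
from datetime import datetime
from typing import Any, Protocol

Record = dict[str, Any]


class Repository(Protocol):
    """What the other layers see, regardless of the backing technology."""
    def insert(self, record: Record) -> None: ...
    def query(self, **filters: Any) -> list[Record]: ...
    def remove_older_than(self, cutoff: datetime) -> list[Record]: ...


class InMemoryRepository:
    """Stand-in backend; a real one could wrap a document, time series or relational database."""

    def __init__(self) -> None:
        self._records: list[Record] = []

    def insert(self, record: Record) -> None:
        self._records.append(record)

    def query(self, **filters: Any) -> list[Record]:
        return [r for r in self._records
                if all(r.get(key) == value for key, value in filters.items())]

    def remove_older_than(self, cutoff: datetime) -> list[Record]:
        old = [r for r in self._records if r["timestamp"] < cutoff]
        self._records = [r for r in self._records if r["timestamp"] >= cutoff]
        return old


def historicize(realtime: Repository, historical: Repository, cutoff: datetime) -> int:
    """Move records older than `cutoff` from the real-time store to the historical one."""
    moved = realtime.remove_older_than(cutoff)
    for record in moved:
        historical.insert(record)
    return len(moved)


# Usage with made-up records.
realtime = InMemoryRepository()
historical = InMemoryRepository()
realtime.insert({"sensor": "s1", "timestamp": datetime(2020, 5, 1), "value": 3})
realtime.insert({"sensor": "s1", "timestamp": datetime(2024, 5, 1), "value": 7})
moved = historicize(realtime, historical, cutoff=datetime(2023, 1, 1))
```

Because callers depend only on the `Repository` protocol, the backend can be swapped per project without changing the modules that read or write the data.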

Analytics

All the information stored in the Platform can be analyzed together with a holistic vision. That is, information can be cross-referenced over time, between vertical systems and even with more static data that has previously been loaded into the Platform (information from the cadastre, rent by neighborhood, typology of each area, etc.).

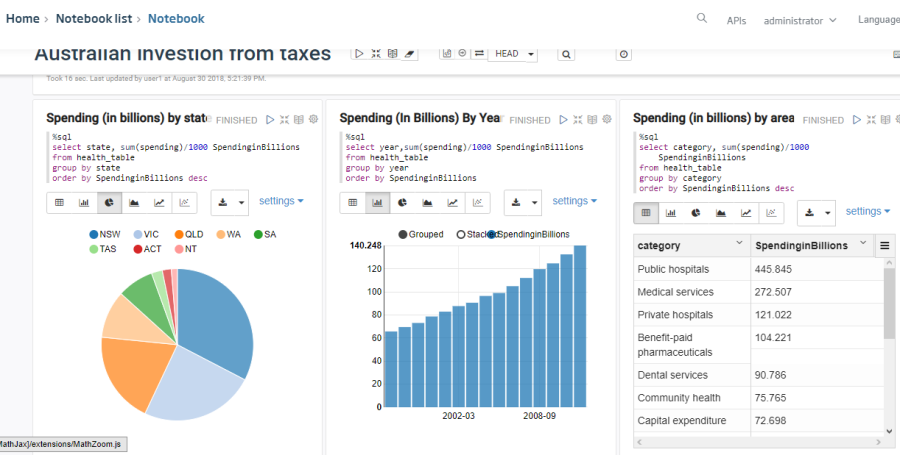

For this, a web module called Scientists Notebooks is available. It allows specialized teams to develop algorithms and AI/ML models from the web environment provided by the platform.

For users with a non-technical profile, another tool, the Data Mining Tool, allows the graphical exploitation of the information.

These models can be published in a simple way to be consumed by other layers and external systems.

In addition, the platform includes tools to exploit information, specifically a complete dashboard engine that allows dashboards to be created visually.
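As a small illustration of the cross-referencing described in this section, the sketch below joins time-stamped readings from a vertical with static data about each area. All records, field names and the `average_by_typology` helper are invented for the example and do not come from the platform.

```python
from collections import defaultdict
from statistics import mean

# Made-up readings from a hypothetical vertical system.
readings = [
    {"neighborhood": "north", "vertical": "air_quality", "month": "2023-01", "value": 40.0},
    {"neighborhood": "north", "vertical": "air_quality", "month": "2023-02", "value": 60.0},
    {"neighborhood": "south", "vertical": "air_quality", "month": "2023-01", "value": 80.0},
]

# Made-up static data previously loaded into the platform.
static_info = {
    "north": {"typology": "residential"},
    "south": {"typology": "industrial"},
}


def average_by_typology(readings, static_info):
    """Cross-reference readings with static area data and average them per group."""
    grouped = defaultdict(list)
    for reading in readings:
        typology = static_info[reading["neighborhood"]]["typology"]
        grouped[(typology, reading["vertical"])].append(reading["value"])
    return {key: mean(values) for key, values in grouped.items()}


averages = average_by_typology(readings, static_info)
```

In a notebook environment the same join would more likely be written with a dataframe library. Plain Python is used here only to keep the sketch self-contained.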

Publication

The Platform offers capabilities to make all the information previously stored in the Platform available to applications and verticals.

The platform is able to publish the information in different ways. We highlight four mechanisms for exposing information:

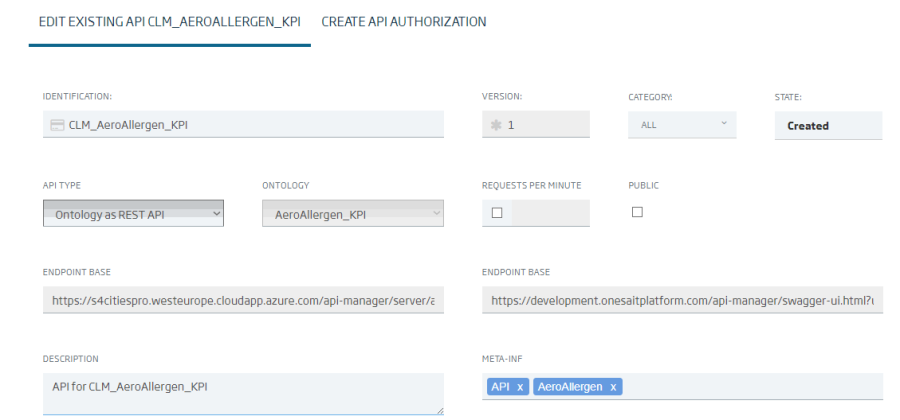

- Api Manager: publishes the information stored in the storage module, as well as the algorithms, in the form of REST APIs that can be managed individually and whose consumption can be monitored.

  - The Api Manager allows the Platform to interact with all types of systems and devices through the most typical digital channels, such as the Web, smartphones, tablets and other systems capable of consuming information through REST.

  - The exposed APIs can also be secured individually, limiting access to each of them to specific users (or groups of users), or exposing information publicly so that it can be consumed by any user, even those not registered on the Platform.

- Publication/subscription: allows integration with different systems, which can subscribe to ontologies. When the information changes (inserts, updates and deletes), the systems integrated with the Platform are updated automatically.

  - It also allows the Platform to interact with all types of systems by invoking their services. The Platform can "ask" the different systems, as often as deemed necessary, to acquire or share information.

- Open Data Portal: the platform allows configuring the export of its entities to an Open Data Portal.

- Holistic Viewer/City Landscape Manager (CLM): this module acts as a Holistic Viewer of the Platform and offers, among other functionality, the following management capabilities:

  - Advanced display of status information.

  - Integration of the information managed by the platform for correlation, analysis, and forecasting of behavior and events.

  - Intelligence based on analytical processes to anticipate actions that help eliminate or mitigate the effects of foreseeable undesired incidents.

  - Advanced sentiment analysis of citizens' perception of the actions of state management.
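The publication/subscription mechanism listed above can be sketched as a small in-process broker. This is only an illustration of the pattern: `OntologyBroker`, its methods, the event shape and the `ParkingSpot` ontology are all hypothetical, and a real deployment would deliver notifications over the network rather than through direct callbacks.

```python
from collections import defaultdict
from typing import Any, Callable

Event = dict[str, Any]
Subscriber = Callable[[Event], None]

OPERATIONS = {"insert", "update", "delete"}


class OntologyBroker:
    """Notifies subscribed systems whenever an ontology's information changes."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Subscriber]] = defaultdict(list)

    def subscribe(self, ontology: str, callback: Subscriber) -> None:
        self._subscribers[ontology].append(callback)

    def publish(self, ontology: str, operation: str, data: dict[str, Any]) -> int:
        """Send the change to every subscriber; return how many were notified."""
        if operation not in OPERATIONS:
            raise ValueError(f"unsupported operation: {operation}")
        event = {"ontology": ontology, "operation": operation, "data": data}
        callbacks = self._subscribers.get(ontology, [])
        for callback in callbacks:
            callback(event)
        return len(callbacks)


# Usage: one external system subscribes to a hypothetical "ParkingSpot" ontology.
broker = OntologyBroker()
received: list[Event] = []
broker.subscribe("ParkingSpot", received.append)
notified = broker.publish("ParkingSpot", "update", {"id": 7, "free": False})
ignored = broker.publish("StreetLight", "insert", {"id": 1})
```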

Integrated Data Management

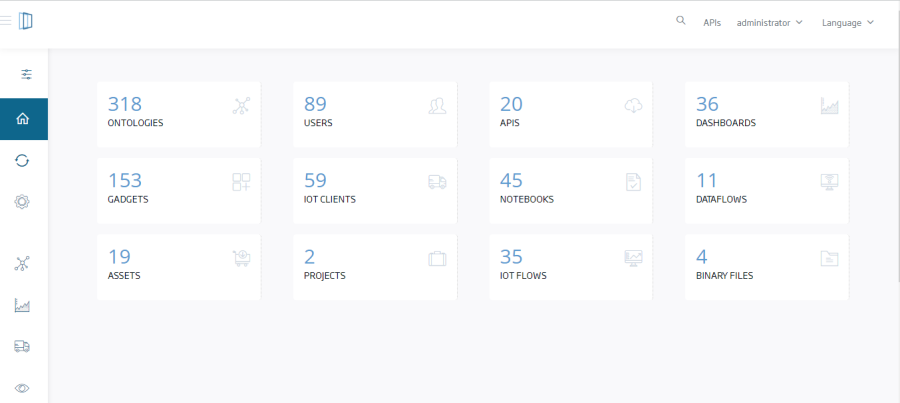

With the goal of providing a holistic and multidisciplinary management experience of the state, the platform can be managed from a single centralized web console that provides basic services of operation, control, configuration and management (user management, access, audit, security, supervision and monitoring, help, etc.).

In this line, the platform has a multi-device, customizable, intuitive, easy-to-use web interface. The use of standardized interfaces and a structured representation of the information facilitates rapid learning and therefore the administrator's productivity. Its design can also be adapted to the project's needs by swapping CSS files, for example to use custom banners or a color scheme aligned with the one used by the state, so that administrators have a look-and-feel similar to the one they are used to.

Next, we describe the main management elements of the centralized web console of the Platform, which will allow the solution to grow both on the horizontal axis (more vertical services), and on the vertical axis (more horizontal services), as well as managing the growth in number of consumers and producers (users, systems or devices) on existing services.

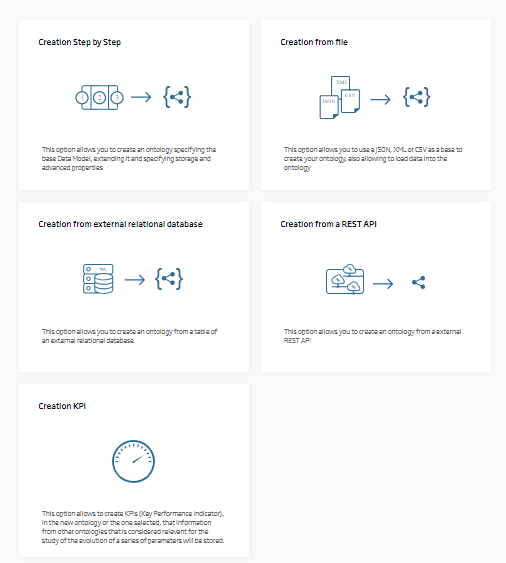

- Ontology Management: enables Data Governance in the platform, that is, it allows administrators to manage the information models exchanged between verticals, horizontals and the Platform. Performing this management online from the console allows new vertical and horizontal services to be incorporated progressively, after first modeling the information they will send. Centralized management of semantic information models provides a single language for communication through the Platform, even between services of different domains and natures.

- Management of Producers and Consumers of information: the centralized console of Onesait Platform also enables the software configuration of the devices connected to the Platform, including the management of their security tokens. This makes it possible to update the internal software of the gateways connected to the Platform and to grant different permissions to new or existing devices. In this way, as the solution scales over time, the operator can manage all the devices centrally from the console itself.

- Real-time Rules Management: this element of the administration console allows the configuration of the real-time rules that react either periodically or upon the arrival of new ontologies to the Platform. The rules are covered in greater detail in the Data Analytics Service section, but bear in mind that they are also managed from the centralized console, which simplifies their management.

- Device Management (Assets): in those cases where the Platform's information producer or consumer is a device, Onesait Platform also allows the management of the physical part of the sensor, actuator or gateway. That is, it keeps an inventory of its make, model, serial number, etc., as well as its location (variable over time) through its geolocation in the georeferencing service.

- Administrator tools: the console has a section that supports administrators in their day-to-day management, providing several types of utilities: operation consoles on the information repositories, ontology format validation, status monitoring of running processes (e.g. information historicization processes, scheduled rules, etc.).

- API Management: the Onesait Platform console also allows central management of the APIs that are exposed externally. This ranges from configuring each API's access policies (public, restricted to certain users, etc.) to monitoring the usage and bandwidth consumed by each of them.

- Social Media Management: from the console itself, social media listening mechanisms can also be scheduled to incorporate this information into the platform's knowledge base. Currently, Twitter, Instagram and Facebook are integrated as sources, as are web "crawlers" able to "browse" through web pages to extract information that may be highly important.

- Report Management: Management of Jasper Reports templates to be used for printing reports in the Platform.

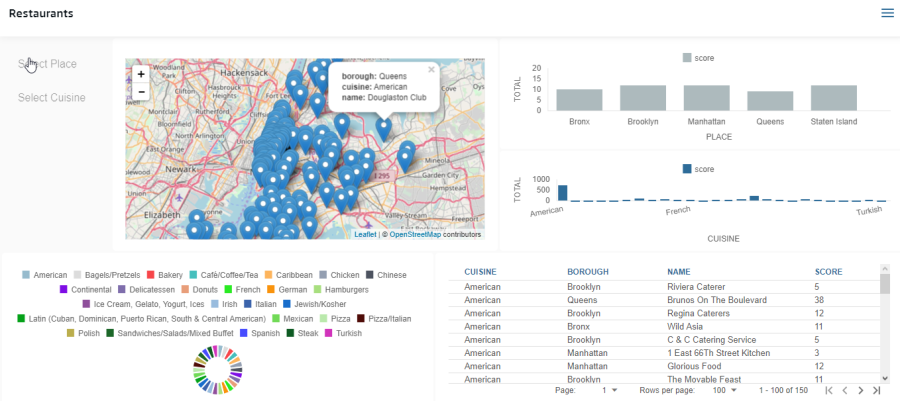

- Visualization Utilities: Enables the creation of dashboards directly from the web console by configuring a set of "widgets" that are ordered and structured from the web interface itself.

- Management of Analytics algorithms: the console also centralizes the algorithms deployed in the Platform and created within the Data Analytics Service. The web console acts as an interface for the development of these algorithms and stores them centrally, which makes it possible to share an algorithm with other users and work on it collaboratively.

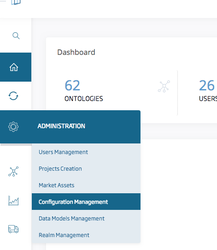

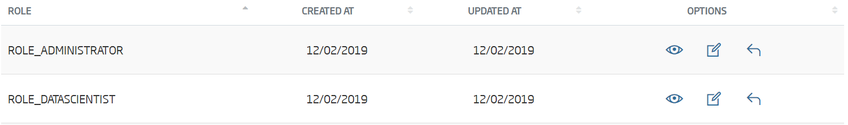

- Administration: finally, the Platform includes the usual functions of registering, deleting, modifying and consulting users and managing their permissions to access the information on the Platform. It also includes more powerful management capabilities, such as Connections (clients connected to the Platform in real time), ontology size monitoring and management of integration operations with external services (integration coding, configuration of visibility to users, etc.).

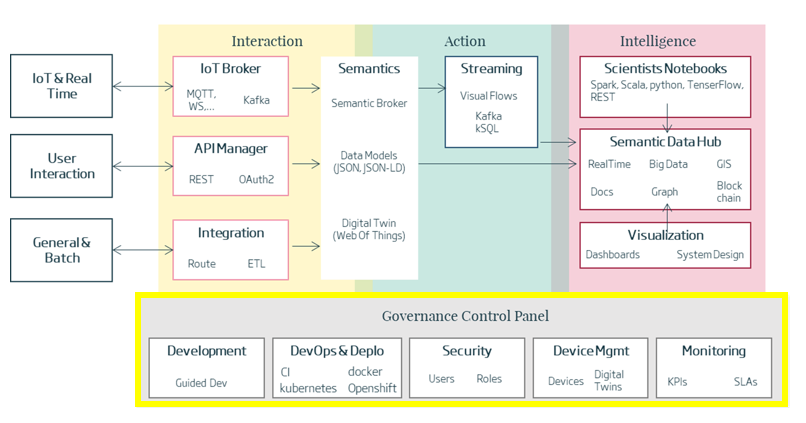
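To make the API Management element above more concrete, the following sketch shows per-API access policies (public or restricted to certain users) together with simple usage monitoring. `ApiPolicy`, `ApiManager` and the API names are hypothetical. User groups and bandwidth accounting are left out to keep the example short, so only call counts are tracked.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ApiPolicy:
    public: bool = False                                   # open to unregistered users
    allowed_users: set[str] = field(default_factory=set)   # otherwise, explicit allow-list


class ApiManager:
    """Keeps one access policy per API and counts the authorized calls."""

    def __init__(self) -> None:
        self._policies: dict[str, ApiPolicy] = {}
        self.calls: Counter = Counter()

    def register(self, api: str, policy: ApiPolicy) -> None:
        self._policies[api] = policy

    def authorize(self, api: str, user: Optional[str]) -> bool:
        policy = self._policies.get(api)
        if policy is None:
            return False  # unknown APIs are never exposed
        return policy.public or (user is not None and user in policy.allowed_users)

    def call(self, api: str, user: Optional[str]) -> bool:
        """Record the call if it is authorized; return whether it was allowed."""
        if not self.authorize(api, user):
            return False
        self.calls[api] += 1
        return True


# Usage: one public API and one restricted to a single user.
manager = ApiManager()
manager.register("air-quality", ApiPolicy(public=True))
manager.register("billing", ApiPolicy(allowed_users={"alice"}))
results = [
    manager.call("air-quality", None),   # anonymous call to a public API
    manager.call("billing", "alice"),
    manager.call("billing", "bob"),
]
```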

Configuration & Governance

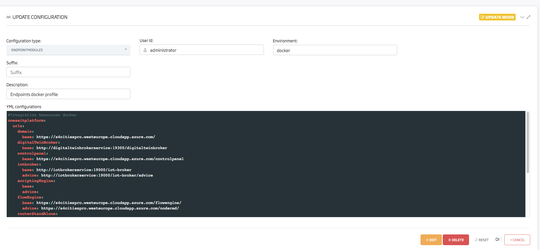

The Platform's configuration services are accessible from the ready-built web console, which allows their immediate use after deployment. They are also exposed as REST APIs, so that other systems, whether already existing or planned in the future roadmap, can both query the configuration and change it (modify existing configurations or create new ones).
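As a rough sketch of how an external system might consume such configuration APIs, the snippet below builds (but does not send) HTTP requests with Python's standard library. The base URL, the resource path, the bearer-token authentication and the payload are all assumptions made for illustration. The actual endpoints and security scheme must be taken from the platform's API documentation.

```python
import json
from typing import Any, Optional
from urllib.request import Request

# Hypothetical base URL; replace with the address of the real configuration API.
BASE_URL = "https://platform.example.com/api/config"


def build_config_request(resource: str, token: str,
                         payload: Optional[dict[str, Any]] = None) -> Request:
    """Build a GET (query) or POST (create/modify) request for a configuration resource."""
    url = f"{BASE_URL}/{resource}"
    headers = {
        "Authorization": f"Bearer {token}",  # assumed auth scheme
        "Accept": "application/json",
    }
    if payload is None:
        return Request(url, headers=headers, method="GET")
    headers["Content-Type"] = "application/json"
    body = json.dumps(payload).encode("utf-8")
    return Request(url, data=body, headers=headers, method="POST")


# Usage: query existing configurations, then prepare the creation of a new one.
query = build_config_request("ontologies", token="my-token")
create = build_config_request("ontologies", token="my-token",
                              payload={"name": "ParkingSpot"})
```

Passing either request to `urllib.request.urlopen` would perform the call. That step is omitted here because the endpoint is fictional.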

Ensuring from the beginning of the project that a Platform with transversal, simple management capabilities is available minimizes implementation times and enables an orderly and agile operation of the solution.

The Platform Control Panel is a complete web console that allows visual management of the elements of the platform through a web-based interface. All this configuration is stored in a configuration database (ConfigDB). Its functionality includes:

- Development control panel: integrates all the tools in the platform that the developer will use when creating applications, including creating ontologies, rules and panels, assigning security, etc.

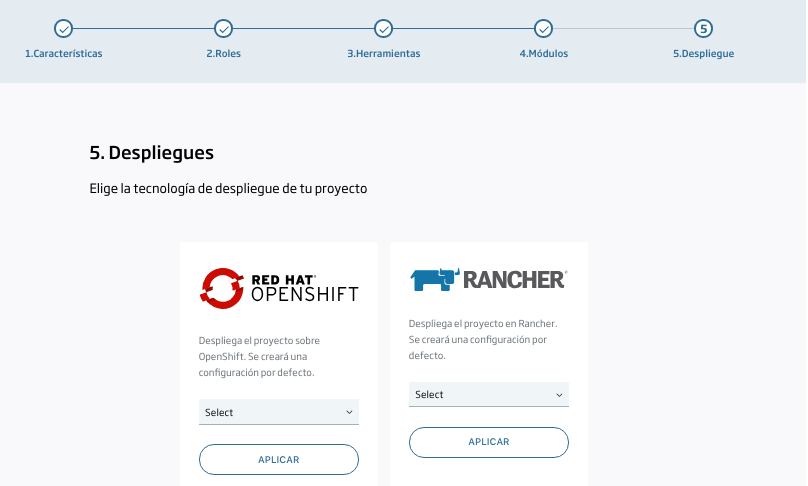

- DevOps & Deploy: this console allows you to configure the tools for the continuous integration of the platform, and also to deploy the platform instances and the additional components that a solution may require.

- Security: allows you to configure all security aspects of the solution, such as the user repository (LDAP or the platform itself), and to define and manage users, roles, etc.

- Device management: allows managing and operating the devices of the IoT solutions.

- Monitoring: helps monitor the platform and solutions through KPIs, alerts, etc.
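As an illustration of the Monitoring item, KPI-based alerting can be reduced to comparing measured values against configured thresholds. The sketch below assumes simple "maximum value" thresholds. `KpiThreshold`, `evaluate_alerts` and the KPI names are hypothetical and do not reflect how the Control Panel models alerts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KpiThreshold:
    name: str
    max_value: float


def evaluate_alerts(kpis: dict[str, float],
                    thresholds: list[KpiThreshold]) -> list[str]:
    """Return one alert message per KPI that exceeds its configured maximum."""
    alerts = []
    for threshold in thresholds:
        value = kpis.get(threshold.name)
        if value is not None and value > threshold.max_value:
            alerts.append(f"{threshold.name}={value} exceeds {threshold.max_value}")
    return alerts


# Usage with made-up measurements.
thresholds = [KpiThreshold("cpu_usage", 0.8), KpiThreshold("queue_length", 1000)]
current = {"cpu_usage": 0.93, "queue_length": 120}
alerts = evaluate_alerts(current, thresholds)
```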

The Onesait Platform Management Web Console provides a range of capabilities practically out-of-the-box, such as accessibility, usability and multi-language support, making management as easy as possible for Platform operators.

Let's see some of its capabilities:

- Administration of the concepts managed by the platform from a Web UI

- Entity Modeling

- Management of Configurations

- Identity management

- Deployment of platform modules

- Monitoring of the global state of the platform

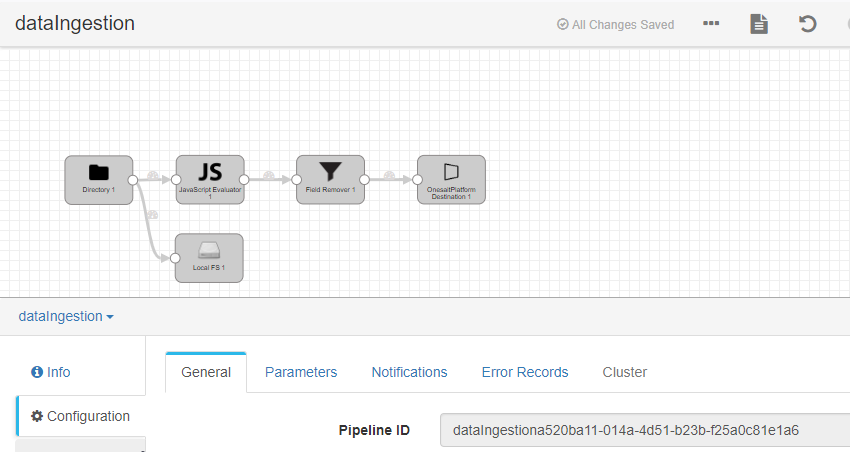

- Visual configuration and execution of ingestions

- Creation and publication of REST APIs in a simple, guided way

- Visual creation of dashboards with drill-down

- Development of analytical models from a web environment for data scientists

The Control Panel also offers a further set of tools and capabilities for the complete management of solutions: publication of web applications, file management, etc.